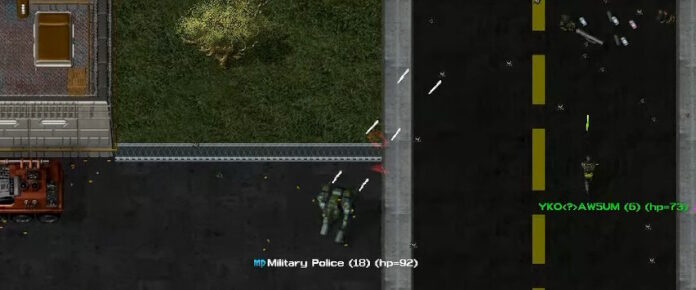

The Division just can’t catch a break.

Glenn Fiedler, a former lead network programmer for Sony and Respawn and current games tech consultant, says that videos of rampant client-side cheating in The Division make him suspect Ubisoft is using a trusted client network model. “I sincerely hope this is not the case, because if it is true, my opinion of can this be fixed is basically no. Not on PC. Not without a complete rewrite,” he says.

Fiedler explains that top-tier FPS games like Overwatch and Call of Duty use a networking model in which the server doesn’t trust your client; instead, it takes your input and runs the simulation itself, all while hiding as much lag as it can. But if The Division is indeed employing a network model that inherently trusts the client, then any ol’ random cheaterhead can tamper with that client to create the messes we’re seeing right now.

“If a competitive FPS was networked the other way, with client trusted positions, client side evaluation of bullet hits and ‘I shot you’ events sent from client to server, it’s really difficult for me to see how this could ever be made completely secure on PC.”
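To make the difference concrete, here is a minimal Python sketch of the two models Fiedler describes. It is purely illustrative and not based on any actual code from The Division or any other game; every name and number in it (`Player`, `MAX_SPEED`, `WEAPON_RANGE`, `trusted_client_hit`, `authoritative_move`, `authoritative_shot`) is hypothetical, and real games add lag compensation and much more on top.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning values, chosen only for this illustration.
MAX_SPEED = 6.0       # movement cap, units per second
WEAPON_RANGE = 50.0   # maximum shot distance, units


@dataclass
class Player:
    x: float = 0.0
    y: float = 0.0
    health: int = 100


def trusted_client_hit(target: Player, claimed_damage: int) -> None:
    """Trusted-client model: the server applies whatever the client reports.

    A modified client can send any damage value for any target.
    """
    target.health -= claimed_damage


def authoritative_move(player: Player, dx: float, dy: float, dt: float) -> None:
    """Server-authoritative model: the client sends only an input direction.

    The server clamps the input and moves the player itself, so a client
    cannot claim to be somewhere it could not have reached.
    """
    length = math.hypot(dx, dy)
    if length > 1.0:
        dx, dy = dx / length, dy / length
    player.x += dx * MAX_SPEED * dt
    player.y += dy * MAX_SPEED * dt


def authoritative_shot(shooter: Player, target: Player,
                       aim_x: float, aim_y: float,
                       damage: int = 25, hit_radius: float = 0.5) -> bool:
    """Server-authoritative model: the client sends only an aim direction.

    The server checks the shot against positions it computed itself and
    applies damage only if the ray actually passes close to the target.
    """
    length = math.hypot(aim_x, aim_y)
    if length == 0.0:
        return False
    ux, uy = aim_x / length, aim_y / length
    tx, ty = target.x - shooter.x, target.y - shooter.y
    along = tx * ux + ty * uy          # distance to target along the aim ray
    if along < 0.0 or along > WEAPON_RANGE:
        return False
    miss_distance = abs(tx * uy - ty * ux)  # distance from ray to target
    if miss_distance > hit_radius:
        return False
    target.health -= damage
    return True
```

In the first function, a hacked client that reports 9,999 damage simply gets it. In the other two, the worst a hacked client can do is send inputs a legitimate player could also have sent, which is why cheats like aimbots can still exist in that model but teleporting and instant kills cannot.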