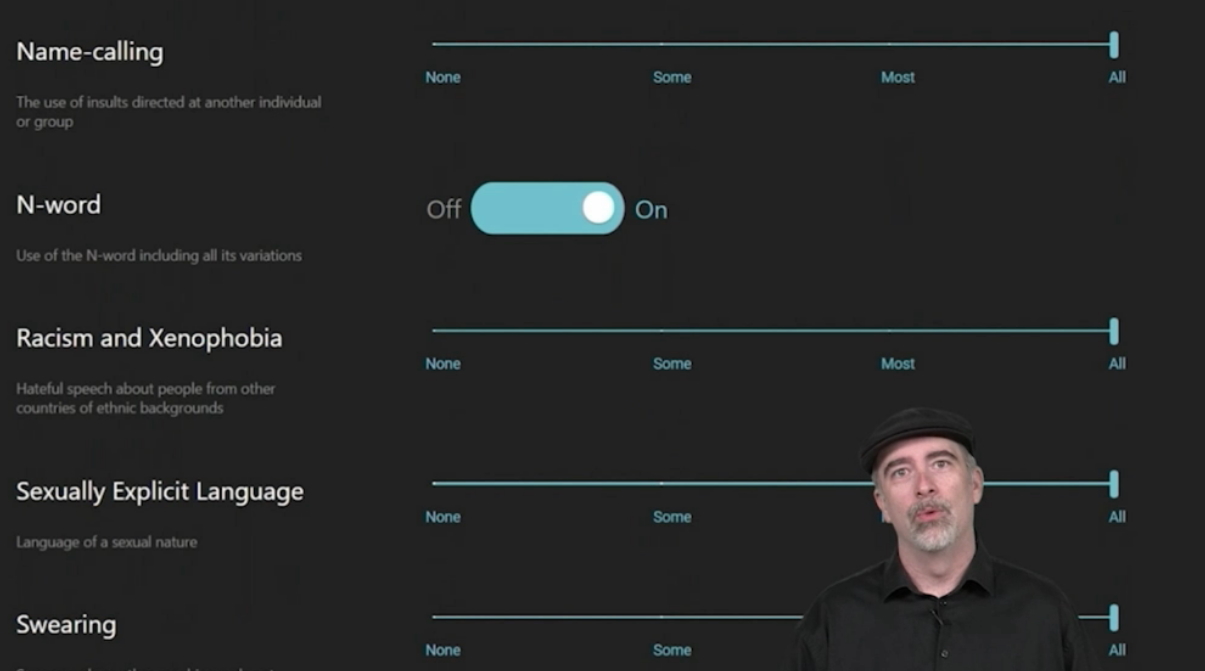

At Intel’s GDC 2021 panel “Billions of Gamers, Thousands of Needs” this week, Intel and Spirit AI announced a new end-user application designed “to detect and redact audio” based on a list of user preferences. Called Bleep, the application appears to be a kind of Windows overlay, though details were scant and there was no actual demo of the product. We did get to see a bit of the UI, which apparently offers options to moderate a broad range of toxic topics, from general name-calling and aggression to LGBTQ+ hate and xenophobia.

Even assuming the audio delay is minimal (latency came up several times in the audio-to-text sessions at GDC this summer), a major concern is how feasible this will be at launch, given that Intel pointed back to a GDC 2019 presentation in which the tech was used only for detecting harmful speech.

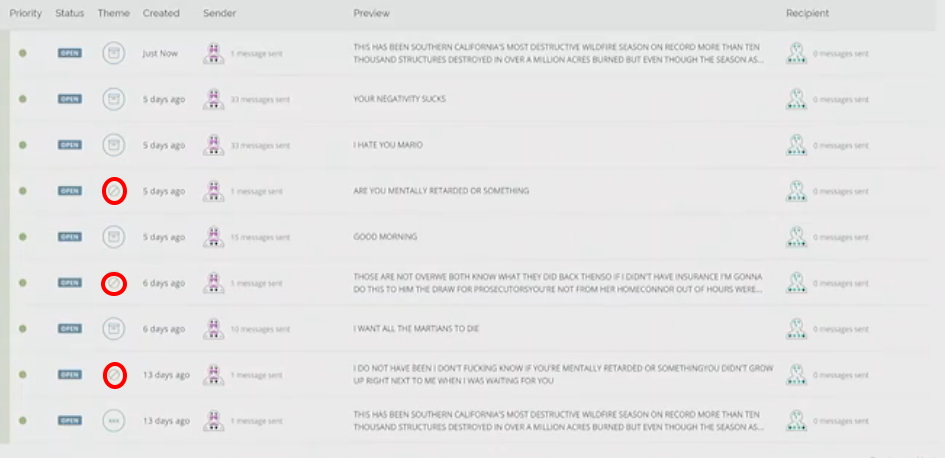

If you’d like to watch the original discussion of this tech from GDC 2019, the relevant section starts around the 18:40 mark. About a minute later, the speaker notes that technology alone probably isn’t enough to curb this kind of behavior and that incentives and enforcement may work better; at the time, the tech was meant only for detection. The image above suggests that proto-Bleep can distinguish genuine aggression in at least some cases: “I hate you Mario” passes, while what looks like an excited rant triggers the filter.

But “I want all martians to die” doesn’t trigger the filter at all. The word “die” alone could make it read as threatening, yet “martians” could also be in-game lingo for a particular faction, which would make the statement a threat toward players on that side. Context matters enormously in speech, so detecting things like white nationalism (“milk” as a literal product vs. coded speech), flirting (which may set off filters even when consensual), friends smack-talking, and roleplaying the bad guy is genuinely hard for AI. And that doesn’t even account for multiple perspectives, such as two people being “jokingly” racist in a way that offends a third party. Admittedly, the product is meant only as a step toward ending toxicity.

The demo UI we were shown this year includes a long list of categories, and some topics have sliding scales rather than a binary on/off toggle. Without any kind of demonstration, however, it’s difficult to judge how well Bleep works in its current form. We reached out to Intel for more information but were told there were no demo videos yet, and that some may appear as the product enters beta ahead of its planned launch this year.