The circle is now complete: Social media has mastered skills MMOs and online games haven’t, not that social media was paying much attention to the fact many of its problems were problems from MMOs, MUDs, and other online games first.

That doesn’t mean it’s too late for game devs, though. Daniel Kelley, the Associate Director for the Anti-Defamation League’s Center for Technology and Society, held a GDC Summer 2020 talk that will most likely be ignored by many large AAA studios, but at least we gamers may be able to recognize Kelley’s points on a more conscious level and shake our heads in unison at upcoming fails or (if I’m being optimistic) cheer on smaller teams that start tackling these problems before they become problems instead of pretending they don’t exist.

War of words

While social media may not be games, they are digital social spaces, which games all have, whether they’re chat rooms hosting MUDs, lobbies for shooters, or Orgrimmar-esque capital cities where 3-D avatars can dance on mailboxes and undercut everyone else’s copper bar prices. In fact, especially during COVID, games may be swinging back to reclaim their space, as younger generations are turning more to games that are accessible across a myriad of devices and work across platforms. It’s not just Fortnite, either; other popular games such as Animal Crossing: New Horizons certainly perform this role. ACNH has hosted protests, talk shows, even talks with politicians. We can socially distance while also feeling present. Awesome.

But even within the world of Animal Crossing, not everything is necessarily wholesome. Sure, laugh about the penis fireworks, but if a kid wanders onto your island and the parent sees your dicks and doesn’t laugh, it’s bad news.

Now imagine if things were more nefarious. Maybe people spread hateful messages or misinformation. Social media platforms are increasingly being held accountable for hosting these kinds of messages by governments around the world, and if game companies don’t learn from this, they may be next.

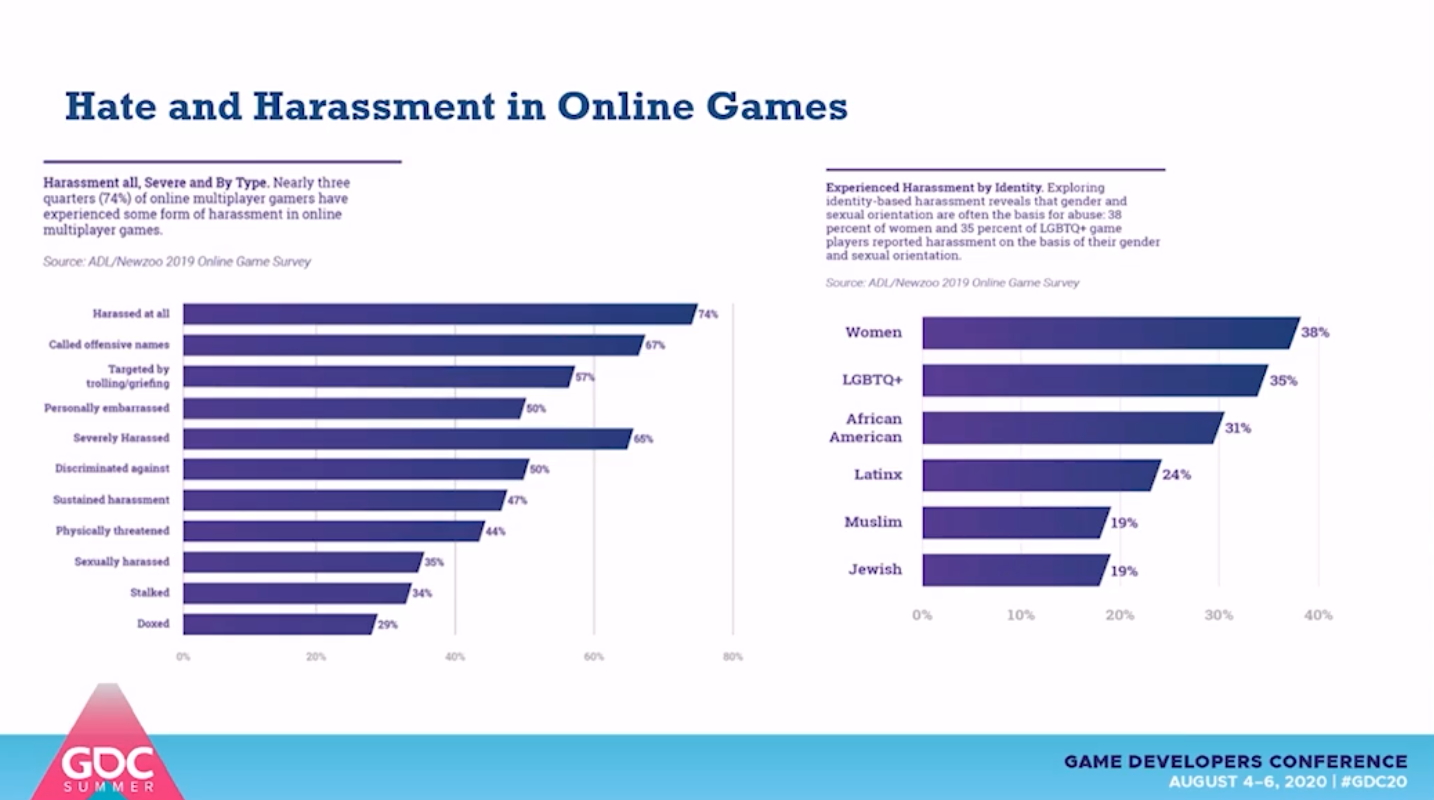

It’s a bit hard to read, but Kelley’s group found that 74% of the people they spoke to had experienced some kind of memorable harassment while playing online games. While I’ve been more lenient with whom I visit in ACNH these days and have certainly had a few unsavory run-ins, I haven’t really even felt like I’ve had the urge to report someone. But better to be safe than sorry these days.

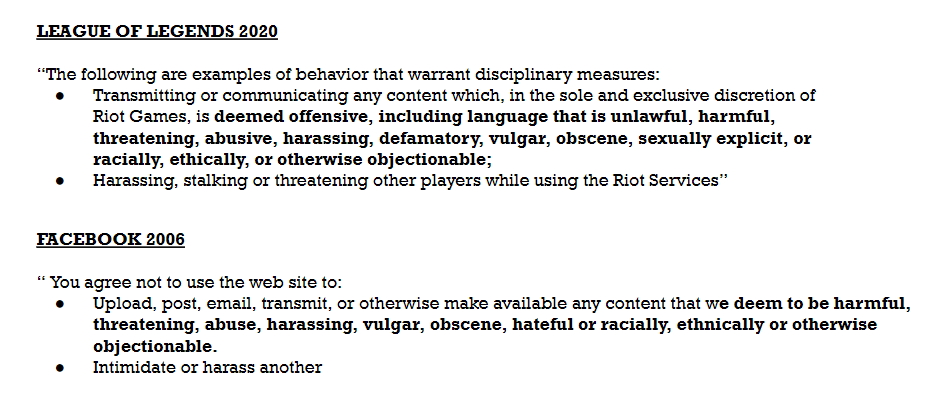

Terms of Use or Codes of Conduct may make companies feel safer against being held liable, but the wording of the past may not be enough. Notice how generalized the text below is:

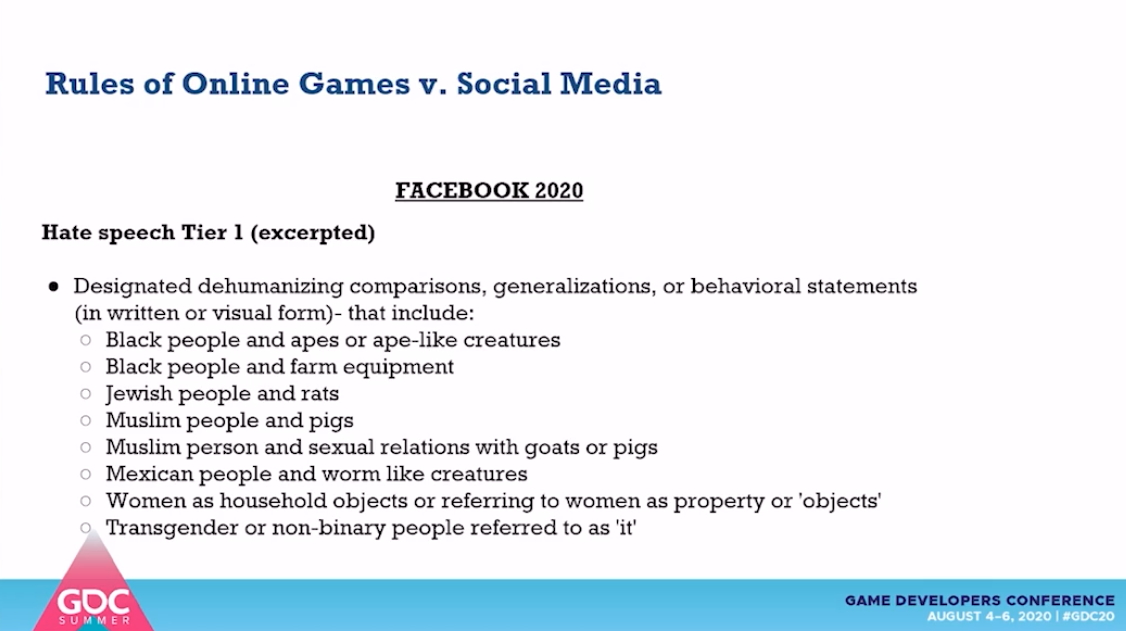

Now notice how League of Legends, a game many people probably know for its toxic-seeming environment, is pretty vague about those non-permissible terms. Let’s compare that with just a fraction of what Facebook has to cover its butt in 2020:

Look at how specific and granular the approach is – and this is only an excerpt and only part of the tier 1 hate speech the company chooses to specify. I’m a vocal critic of Facebook, but even I have to applaud this (just not the way the platform turns a blind eye if it’s receiving enough money from the source).

But let’s just say “so what?” for a moment. Why not just ask people to mind their own business?

“We’re a video game, not a political platform. We don’t take responsibility for what our users say. Free speech.” As one developer noted, all the ethical considerations are directly at odds with the agile processes common in tech. Our own community here has talked about this in relation to how new tech needs to consider these past mistakes, but to paraphrase Ian Malcolm from Jurassic Park, I’ve found that many people in tech are so preoccupied with whether or not they could, they didn’t stop to think if they should. So, for the sake of conversation, let’s say the warnings and the mistakes others have already made are ignored, experts aren’t consulted, and we hit our next snag.

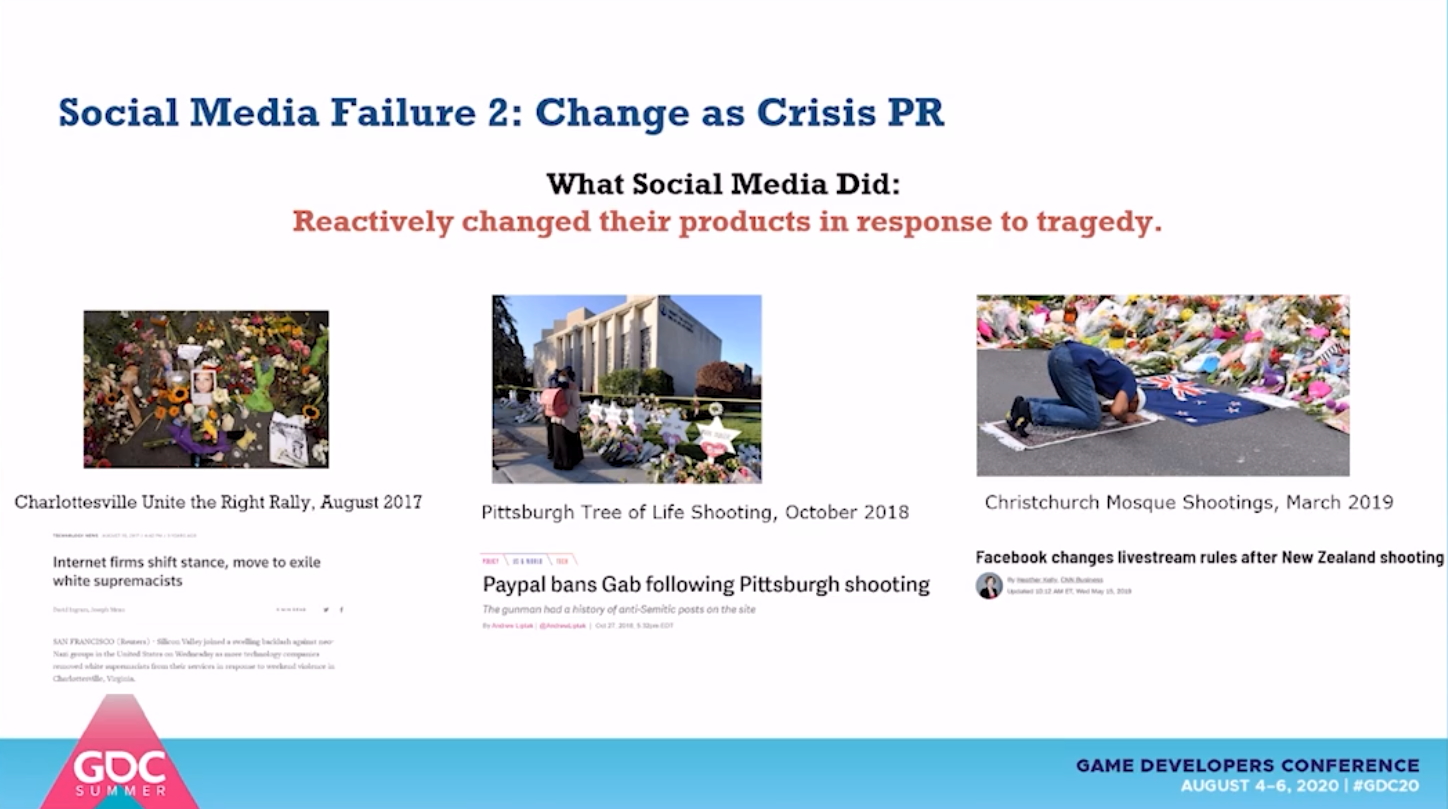

Maybe tragedy strikes after that. People who carry out an attack such as those in the slide might have met, talked, or even recruited people for it in your game. Instead of being proactive and having plans and teams set up to develop and maintain a safe online space, you suddenly have brand image failures, you get kicked off social media, you lose advertising, maybe you even have the government come in and ask to look at your data.

Kelley argues that in order to avoid this, not only should studios have had some important chats, but they should be keeping track of the extremists in their virtual space, as weird as that sounds. Seek to protect those they are trying to harm, and call in civil liberty groups used to helping with these sorts of things. Social media groups, by and large, have failed at this.

So now you have game designers being asked to share metrics they don’t have. And they’re being asked this by the government, live on TV. It’s not like you’re a local bowling team; you’re a tech company. You should have these data somewhere; you just need to keep records of them, right? And when you tell the government and everyone watching at home that you weren’t keeping track of that, well boy, you look pretty irresponsible, don’t you? Someone should come in and handle this because clearly you can’t be trusted.

Ugh. None of us wants that. Think about how badly CEOs and even mid-level designers defend poor design choices and monetization. That’s the shame/outrage we deal with as gamers in the gaming community. We don’t want the rest of the world seeing those clowns and thinking those are the best our community produces, right? So we need to ask these hard questions to our community managers.

Kelley suggested that report forms should include the names of the avatars harassed, and that studios should use the data gathered to make changes that help affected communities, then track the data so they can give transparency reports about who is being targeted and what the team can do about it. That way, if something does go wrong and some poor lootbox-promoting CEO gets called in for public questioning, at least she’ll have data that show that the company was making an effort. People are more forgiving if it looks like you just missed a turn rather than fell asleep at the wheel.
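For the curious, here is a minimal sketch of what that kind of tracking could look like in practice. To be clear, this is not from Kelley's talk: the `HarassmentReport` record, its field names, the category labels, and the `transparency_summary` helper are all hypothetical, and a real studio would need far more (privacy review, retention rules, and so on). The idea is simply that each report stores the harassed avatar's name and a category, and the public-facing summary only exposes aggregate counts.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class HarassmentReport:
    # Every field name here is an illustrative assumption.
    reporter_avatar: str
    targeted_avatar: str  # the avatar that was harassed
    category: str         # e.g. "hate_speech", "threat", "spam"
    action_taken: str     # e.g. "none", "mute", "ban"


def transparency_summary(reports):
    """Roll individual reports up into aggregate counts for publication.

    Avatar names stay in the internal records; only totals are returned.
    """
    by_category = Counter(r.category for r in reports)
    by_action = Counter(r.action_taken for r in reports)
    actioned = sum(1 for r in reports if r.action_taken != "none")
    return {
        "total_reports": len(reports),
        "by_category": dict(by_category),
        "by_action": dict(by_action),
        # Share of reports where moderators did something about it.
        "actioned_share": actioned / len(reports) if reports else 0.0,
    }
```

Even something this small would give that CEO a number to point to, such as how many reports came in per category and what share led to a moderation action.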

To note, Kelley says that the group’s survey “found 23% of respondents experienced some kind of discussion about white supremacy in online games,” but they “can’t go as far as saying it was recruitment or understand how it contributed to radicalization.” While 88% of people surveyed had positive online experiences, such as making friends, game companies still need to be careful, as data about potential extremists are stacking up.

For example, Kelley said the ADL recently “released a report that looked at the phenomena of white supremacist activity on Steam, and quite easily found a wide variety of white supremacist ideology on the platform.” The fact that certain companies are now removing OK emotes probably means other companies have seen similar data themselves.

Currently, according to Kelley, no major online game has an explicit policy related to extremism in its community. The closest is Roblox, of all games, whose policy around “dangerous, unethical and illegal activities” reads, “Not only do we not allow these activities, we do not allow anyone discussing them or encouraging others to discuss (or take) such actions,” and lists “Terrorism, Nazism or Neo Nazism, organized criminal activity, gangs and gang violence” among the behaviors.

“Additionally, as no game company has released a transparency report yet, it’s impossible to know the prevalence of the problem of extremism and what is effective in addressing it in online games,” Kelley says. And this is mostly in relation to visual moderation.

Those grayer areas

Of course, there are some topics that games may be wrestling with as social media also does. For example, while visual chat and images have tons of tools to help with moderation, audio chat has far fewer. Clearly more work is in order here, since if people feel a certain outlet is unmoderated, abusing that channel and its users becomes the norm (which is probably why many of us give up on voice chatting with strangers quite quickly).

Kelley also noted the issue with esports stars and influencers. He notes that they have a wide reach and are primarily used as marketing, but they also set a tone for the community with their behavior.

When someone asked whether transparency on policies can come back to bite companies, Kelley admitted that the answer is “maybe,” but he also noted that not doing anything does allow griefer types the opportunity to “take advantage of new ways for people to connect.” It’s better to be transparent and to show that your company is trying to protect people than to create a shadow of a doubt that problem people are welcomed in your virtual space.

That being said, you also need to walk the line with how transparent you are. People need to feel that your company is doing something about bad apples, but public shaming may not be the best solution.

There’s so much going on, and players and press really can’t do much more than voice concerns and dredge up past mistakes we see current devs make. However, for people going into law who may want to help make a difference, Kelley recommends checking out Danielle Citron’s Hate Crimes in Cyberspace and Kate Klonick’s The New Governors as a first step.

Massively OP’s Andrew Ross is an admitted Pokemon geek and expert ARG-watcher. Nobody knows Niantic and Nintendo like he does! His Massively on the Go column covers Pokemon Go as well as other mobile MMOs and augmented reality titles!
