It’s nearly impossible to be a gamer in 2019 and not be at least familiar with Discord, the ubiquitous chat program that’s replaced, by turns, voice chat, text chat, even guild chat, and for some folks, Steam. That ubiquity also makes it a prime destination for the worst kinds of humans. You might be wondering what exactly a company with 250 million registered users does to address that. That’s what Discord’s latest transparency blog post is all about.

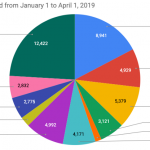

The company says it received over 50,000 reports in just the first three months of 2019, as users filed complaints about everything from harassment, threats, and doxxing to self-harm, hacking, and spam.

“In the investigation phase, the Trust and Safety team acts as detectives, looking through the available evidence and gathering as much information as possible,” Discord says. “This investigation is centered around the reported messages, but can expand if the evidence shows that there’s a bigger violation — for example, if the entire server is dedicated to bad behavior, or if the behavior appears to extend historically. We spend a lot of time here because we believe the context in which something is posted is important and can change the meaning entirely (like whether something’s said in jest, or is just plain harassment).”

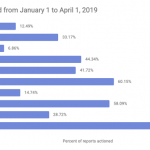

Punishments come in multiple tiers, starting with content removal and warnings and escalating to permanent bans or even reports to law enforcement. According to Discord, most spam reports check out and are acted on quickly, but other categories, such as self-harm and “exploitative content” complaints, often result in no action because the reports are fake, malicious, mislabeled, missing information, or insufficient to justify punishment. The numbers suggest that spam is the biggest problem by a wide margin, and that the remaining toxicity is much smaller than you might guess.

“For perspective, spam accounted for 89% of all account bans and is over eight times larger than all of the other ban categories combined. […] Amongst all other categories, we banned only a few thousand users total. Relative to our 50 million monthly active users, abuse is quite small on Discord: only 0.003% of monthly active users have been banned. When adjusted for spam, the actual number is ten times smaller at 0.0003%.”